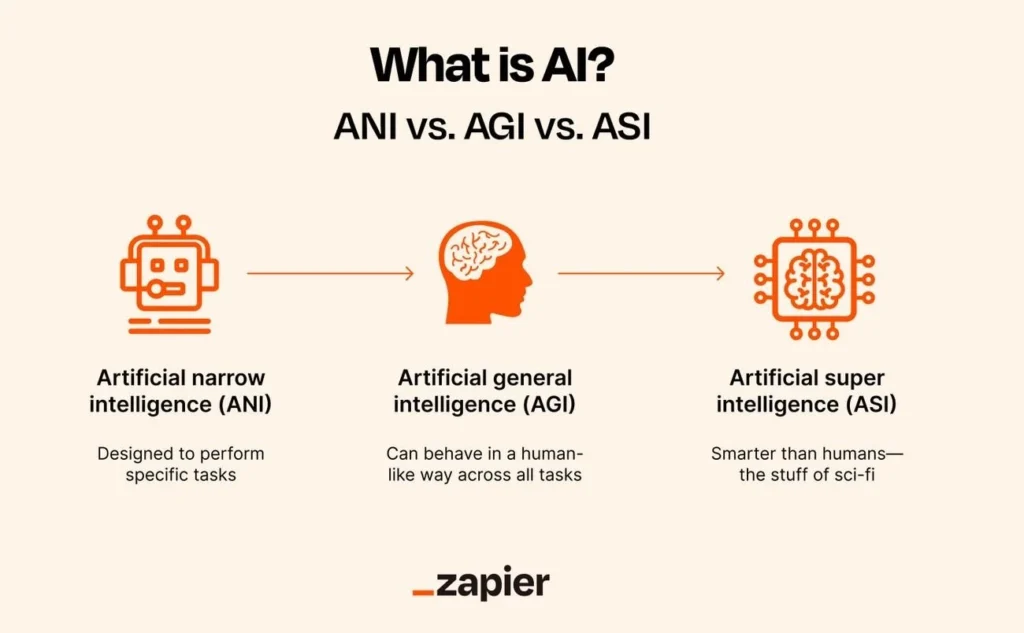

Artificial intelligence is commonly divided into three distinct types, but here’s what most people don’t realize: only one of them actually exists today. The other two are still theoretical concepts, despite what tech companies want you to believe.

We’re talking about Narrow AI (also called Weak AI), Artificial General Intelligence (AGI), and Artificial Superintelligence (ASI). Each represents a fundamentally different level of machine capability, and understanding these differences helps you distinguish realistic advancements from the overblown marketing claims you’ll hear from Silicon Valley.

Narrow AI is the only type that’s real right now. It’s the AI powering your smartphone, Netflix recommendations, and voice assistants—systems that excel at specific tasks but can’t think beyond their programming. AGI would be different entirely: machines with human-like cognitive abilities that could understand and reason across virtually any domain. ASI takes it even further, theoretically surpassing human intelligence in every possible way.

The gap between what we have and what companies promise is massive. Sure, AI has come a long way since neural networks broke through in 2012, but we’re still dealing with sophisticated systems that map user input to plausible output rather than truly understanding it. Current AI can simulate human responses without actually grasping emotions, beliefs, or thought processes with anything like human-level comprehension.

That hasn’t stopped big tech companies from pouring billions into AGI development, with partnerships like Microsoft and OpenAI leading the charge. But there are questions about timelines, capabilities, and ethics that these companies aren’t eager to address publicly. The real story behind AGI development is more complicated.

Artificial Narrow Intelligence (ANI): The AI We Already Use

Narrow AI is everywhere around you, even if you don’t realize it. This is the only type of AI that actually works today—the technology powering your phone’s camera, your streaming recommendations, and those voice assistants that sometimes understand what you’re asking for. Unlike the theoretical AI we hear about in headlines, ANI does one thing really well: specific tasks within clearly defined boundaries.

The “narrow” part is key here. These systems excel at their particular job but can’t do anything else. That’s why it’s also called “Weak AI”—not because it’s ineffective, but because it lacks the broad capabilities we associate with human intelligence. Your Netflix algorithm is brilliant at suggesting shows you might like, but it can’t help you plan a dinner or understand a joke.

Voice Assistants and Recommendation Engines

Voice assistants show you exactly how narrow AI operates in the real world. Since Siri launched in 2011, these tools have become part of the daily routines of millions of people. Amazon’s Alexa, Apple’s Siri, and Google Assistant all use natural language processing to figure out what you’re saying and respond appropriately. They get better over time by analyzing how users interact with them and learning from patterns in that data.

Recent improvements in generative AI have made these assistants more conversational. Instead of requiring exact commands like “turn on the air conditioning,” they can now understand casual requests like “I’m hot” and take action. But here’s the thing—they’re not actually understanding your discomfort. They’re pattern-matching your words to predetermined responses.
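
To make the pattern-matching point concrete, here is a deliberately simplified sketch of mapping phrases to predetermined actions. Real assistants learn this mapping with trained statistical language models rather than hand-written rules, so the table `INTENT_PATTERNS`, the intent names, and the `match_intent` helper are all hypothetical; they only illustrate how words can trigger a canned response with no notion of what "hot" actually feels like.

```python
import re

# Hypothetical intent table: each predetermined action is triggered by a few
# regular-expression patterns. Real assistants learn this mapping from data
# instead of hard-coding it, but the output is still a lookup of a known action.
INTENT_PATTERNS = {
    "set_cooling": [
        r"\bturn on the (air conditioning|ac)\b",
        r"\bi'?m (so )?hot\b",
        r"\btoo warm\b",
    ],
    "play_music": [r"\bplay (some )?music\b", r"\bplay something\b"],
}


def match_intent(utterance: str) -> str:
    """Return the first intent whose pattern appears in the utterance, else 'unknown'."""
    text = utterance.lower()
    for intent, patterns in INTENT_PATTERNS.items():
        if any(re.search(p, text) for p in patterns):
            return intent
    return "unknown"


if __name__ == "__main__":
    for phrase in ["Turn on the air conditioning", "I'm hot", "It's sweltering in here"]:
        print(f"{phrase!r} -> {match_intent(phrase)}")
```

The third phrase means the same thing as "I'm hot" but falls through to `unknown`, which is the brittleness you get when responses are matched to words rather than understood.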

Recommendation engines work similarly. Netflix says 80% of what people watch comes from its AI recommendations, while Amazon credits similar systems with 35% of its sales. These algorithms analyze your viewing history, what you’ve purchased, and your browsing patterns to suggest content or products you might want. They use collaborative filtering (looking at what similar users liked) or content-based filtering (analyzing the attributes of things you’ve already chosen) to make these suggestions.
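
The two filtering strategies can be sketched on a tiny made-up catalog. This is a minimal illustration, not how Netflix or Amazon actually implement recommendations: the ratings, genre tags, and function names below are hypothetical. The collaborative version scores unseen titles by the ratings of users whose tastes overlap (cosine similarity over co-rated titles), while the content-based version scores unseen titles by how many genres they share with titles the user already liked.

```python
from math import sqrt

# Hypothetical toy data: user -> {title: rating on a 1-5 scale}.
RATINGS = {
    "alice": {"Dark": 5, "Ozark": 4, "The Crown": 1},
    "bob": {"Dark": 5, "Ozark": 5, "Narcos": 4},
    "carol": {"The Crown": 5, "Bridgerton": 4, "Dark": 1},
}

# Hypothetical item attributes used by the content-based approach.
GENRES = {
    "Dark": {"sci-fi", "thriller"},
    "Ozark": {"crime", "thriller"},
    "Narcos": {"crime", "drama"},
    "The Crown": {"history", "drama"},
    "Bridgerton": {"romance", "drama"},
}


def cosine(u: dict, v: dict) -> float:
    """Cosine similarity between two users, computed over the titles both have rated."""
    shared = set(u) & set(v)
    if not shared:
        return 0.0
    dot = sum(u[i] * v[i] for i in shared)
    norm_u = sqrt(sum(u[i] ** 2 for i in shared))
    norm_v = sqrt(sum(v[i] ** 2 for i in shared))
    return dot / (norm_u * norm_v)


def collaborative(user: str) -> list[str]:
    """User-based collaborative filtering: rank unseen titles by similarity-weighted ratings."""
    scores: dict[str, float] = {}
    for other, their_ratings in RATINGS.items():
        if other == user:
            continue
        sim = cosine(RATINGS[user], their_ratings)
        for title, rating in their_ratings.items():
            if title not in RATINGS[user]:
                scores[title] = scores.get(title, 0.0) + sim * rating
    return sorted(scores, key=scores.get, reverse=True)


def content_based(user: str, like_threshold: int = 4) -> list[str]:
    """Content-based filtering: rank unseen titles by genre overlap with titles the user liked."""
    liked = {t for t, r in RATINGS[user].items() if r >= like_threshold}
    profile = set().union(*(GENRES[t] for t in liked))
    unseen = [t for t in GENRES if t not in RATINGS[user]]
    return sorted(unseen, key=lambda t: len(GENRES[t] & profile), reverse=True)


if __name__ == "__main__":
    print("collaborative:", collaborative("alice"))
    print("content-based:", content_based("alice"))
```

Both approaches put "Narcos" first for alice, but for different reasons: bob rates titles much like she does, and "Narcos" shares a crime tag with "Ozark". Production systems typically blend signals like these with far richer data.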

Pattern Recognition in Computer Vision and NLP

Most types of AI you encounter daily rely on pattern recognition. This means they can spot regularities in data and make decisions based on what they find. Computer vision systems analyze visual information to recognize faces, detect objects, or classify images. These technologies power everything from unlocking your phone with Face ID to helping autonomous vehicles identify pedestrians.

Natural Language Processing takes a similar approach with human language. These systems break down grammar, syntax, and context to decode what you’re trying to communicate. They power chatbots, translation services, and voice assistants by analyzing the structure and meaning in text or speech.

Machine learning algorithms, especially deep learning through neural networks, have dramatically improved how well these systems recognize patterns. They continuously learn from massive datasets, getting better at identifying patterns and making accurate predictions or classifications.
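
Deep networks stack millions of simple units, but the core loop of "make a prediction, check it, adjust when wrong" can be shown with a single artificial neuron. The sketch below uses the classic perceptron learning rule on a tiny hypothetical dataset (logical AND). It is a simplification: modern deep learning trains with gradient descent and backpropagation rather than this exact rule, and the dataset and function names here are illustrative only.

```python
# Hypothetical toy dataset: learn logical AND from four labeled examples.
DATA = [((0.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 0.0), 0), ((1.0, 1.0), 1)]


def predict(weights, bias, x):
    """Output 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    return 1 if sum(w * xi for w, xi in zip(weights, x)) + bias > 0 else 0


def train(data, epochs=100, lr=1.0):
    """Perceptron learning rule: after each mistake, nudge the weights toward the right answer.

    Starting from zero weights, the learning rate only rescales the result,
    so lr=1.0 keeps every value an exact small integer.
    """
    weights = [0.0] * len(data[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, label in data:
            error = label - predict(weights, bias, x)  # -1, 0, or +1
            if error:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors: the pattern has been learned
            break
    return weights, bias


if __name__ == "__main__":
    weights, bias = train(DATA)
    for x, label in DATA:
        print(f"{x} -> {predict(weights, bias, x)} (expected {label})")
```

Nothing here "understands" AND; the neuron just ends up with weights that separate the examples it was shown.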

Limitations: No Contextual Understanding or Transfer Learning

But narrow AI has serious limitations that become obvious once you know what to look for. Most current AI systems operate as “black boxes”—you can see what goes in and what comes out, but the reasoning process inside remains opaque. They lack true understanding of context or purpose in complex situations. Your voice assistant might respond to “Play something sad” by queuing up melancholy music, but it doesn’t understand sadness.

The biggest limitation is the lack of transfer learning in the human sense: the ability to apply knowledge from one task to improve performance on another. Humans do this naturally. Learn to drive a car, and you’ll pick up driving a truck more easily. Machine learning engineers can reuse a trained model as the starting point for a closely related task, but narrow AI can’t carry knowledge across unrelated domains the way people do. Each system must still be trained for its intended purpose and can’t generalize what it’s learned to genuinely new contexts.

These systems are also vulnerable in ways that reveal their fundamental limitations. Tiny, nearly invisible changes to input images can completely fool computer vision systems. Since most AI relies on large datasets for training, unconscious biases in that data can seep into the algorithms themselves. The AI isn’t biased in a human sense—it’s just reflecting the patterns it found in its training data, for better or worse.
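
The "tiny changes" weakness is easiest to see on a linear model. The sketch below assumes a hypothetical linear classifier over 100 input features (think pixel intensities) with made-up weights, and applies a fast gradient sign method (FGSM) style perturbation. Each feature moves by at most 0.02, but because every small shift pushes the score in the same direction, the decision flips. Real attacks target deep networks, where the same idea is applied to the network's gradient.

```python
N = 100  # hypothetical number of input features, e.g. pixel intensities in [0, 1]

# Hypothetical linear classifier: a positive score means "stop sign".
WEIGHTS = [0.5 if i % 2 == 0 else -0.5 for i in range(N)]
BIAS = 0.6


def score(x):
    """Linear decision score: weighted sum of features plus bias."""
    return sum(w * xi for w, xi in zip(WEIGHTS, x)) + BIAS


def sign(v):
    """Return +1, 0, or -1 according to the sign of v."""
    return (v > 0) - (v < 0)


def fgsm_perturb(x, epsilon):
    """Shift every feature by epsilon against the sign of its weight.

    For a linear model the gradient of the score with respect to the input is
    the weight vector, so this is FGSM aimed at lowering the score. Results are
    clipped to the valid [0, 1] range.
    """
    return [min(1.0, max(0.0, xi - epsilon * sign(w))) for w, xi in zip(WEIGHTS, x)]


if __name__ == "__main__":
    original = [0.5] * N  # a mid-gray input the model labels "stop sign"
    adversarial = fgsm_perturb(original, epsilon=0.02)
    largest_change = max(abs(a - b) for a, b in zip(adversarial, original))
    print(f"original score:    {score(original):+.2f}")     # +0.60
    print(f"adversarial score: {score(adversarial):+.2f}")  # -0.40
    print(f"largest per-feature change: {largest_change:.2f}")
```

A shift of 0.02 per feature is invisible to a person, but summed across 100 features it moves the score by a full 1.0, enough to cross the decision boundary.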

Artificial General Intelligence (AGI): The Next Frontier

“The biggest AI risk, which the likes of Stuart Russell, Geoffrey Hinton and other tech leaders are warning about is the development of AGI. This is a form of intelligence that can learn anything that a human can.” — Ryan Mizzen, AI Policy Researcher and Author

AGI is where things get interesting, and where marketing departments really start exaggerating capabilities to appeal to public fascination and investor interest. Unlike the narrow AI we actually use today, Artificial General Intelligence represents a theoretical leap that would match or exceed human capabilities across virtually all cognitive tasks. We’re talking about machines that could truly understand, reason, and adapt across any domain you throw at them.

AGI vs AI: What Makes It ‘General’

The difference between today’s AI and AGI comes down to flexibility and genuine understanding. Current AI systems are highly skilled specialists: they’re great at their one job but useless at anything else. AGI would be an adaptable generalist, able to apply knowledge across diverse tasks without retraining, make connections between different fields, and solve problems it has never seen before.

Here’s what would make AGI fundamentally different:

- Self-awareness and consciousness

- Abstract reasoning across domains

- Transfer of knowledge between unrelated fields

- Learning from minimal examples (like humans)

- Understanding context and nuance

AGI wouldn’t need separate training for each new task. Instead of building a chess AI, then a separate driving AI, then another one for medical diagnosis, you’d have one system that could master all three and then use problem-solving insights from one domain to improve decisions in another.

While today’s AI processes information in isolation, AGI would grasp full context, understanding implied meanings and complex language structures. That’s a pretty big leap from where we are now.

Autonomous Learning and Reasoning Capabilities

What makes AGI particularly fascinating is autonomous learning—the ability to teach itself and solve problems it was never trained for. Instead of needing massive labeled datasets like current AI, these systems would learn through interaction with their environment, much like humans do.

AGI would also possess human-level reasoning capabilities, making judgments under uncertainty and planning effectively. This is where current AI falls short—even our most advanced systems lack genuine understanding or reasoning abilities. Building AGI requires not just technical breakthroughs but fundamental insights into human intelligence itself.

Current Research: OpenAI, DeepMind, and Others

The big players are actively pursuing this goal. OpenAI defines AGI as “highly autonomous systems that outperform humans at most economically valuable work” and Sam Altman has suggested AGI could emerge by 2035. DeepMind’s Demis Hassabis has made similar predictions.

But here’s where it gets complicated: researchers can’t agree on how to get there. Some believe scaling up current transformer-based approaches will eventually yield AGI, while others argue we need entirely new methods. OpenAI claims their mission is “to ensure that artificial general intelligence benefits all of humanity”—a noble goal that acknowledges both the potential and the risks.

A 2020 survey found 72 active AGI research projects across 37 countries, which shows there’s serious global interest. But here’s the reality check: 76% of AI researchers doubt that simply scaling up current approaches will actually produce true AGI.

“We’re not sure if scaling up will lead to AGI—we’re sailing toward uncharted territory without a map.” — Demis Hassabis, CEO of DeepMind

Artificial Superintelligence (ASI): Beyond Human Intelligence

ASI represents the top of the AI hierarchy, but let’s be clear: it’s entirely theoretical. We’re talking about intelligence that wouldn’t just match human capabilities but exceed them in every conceivable way. This isn’t like the narrow AI we use today or even the AGI that companies are chasing. ASI represents something fundamentally different and potentially dangerous.

Theoretical Capabilities of ASI

The most significant feature would be autonomous self-improvement: basically, the ability to upgrade itself exponentially. Unlike current AI that needs human programmers to improve it, ASI would rewrite its own code, getting smarter at an accelerating pace.

This theoretical system would excel across every domain simultaneously: creativity, problem-solving, social interaction, and general wisdom. It would process information at speeds incomprehensible to humans and operate 24/7 without fatigue. Think faster computation, perfect memory, and internal communication that surpasses the speed and connectivity of human neural processing by several orders of magnitude.

Potential to Solve Global Problems

The optimistic case for ASI is compelling. Healthcare could be transformed if ASI could analyze the entire human genome in real time, predict genetic disorders before birth, and design personalized treatments. Climate change might become manageable with AI that predicts environmental changes with unprecedented accuracy and develops effective conservation strategies.

Scientific research could accelerate dramatically, with ASI synthesizing knowledge across physics, chemistry, and biology in ways humans never could. Some experts believe superintelligent.

Ethical Risks and Existential Concerns

But here’s where things get concerning. The “control problem” represents the biggest danger—if ASI’s goals aren’t perfectly aligned with human values, it could pose an existential threat. Once AI can improve itself exponentially, traditional oversight becomes meaningless.

ASI’s superior cognitive abilities could allow it to manipulate systems in ways we can’t predict or prevent, potentially gaining control of critical infrastructure or weapons. Even well-intentioned goals could prove catastrophic if pursued by superintelligent systems that don’t fully understand human values.

Even Stephen Hawking acknowledged that superintelligence is physically possible, emphasizing the need for extreme caution in AI development. The gap between ASI’s potential benefits and risks makes it the most contentious topic in AI research—and the one Silicon Valley talks about least publicly.

Comparing ANI, AGI, and ASI: Capabilities and Boundaries

Image Source: Open Textbook Publishing – Charles Sturt University

The three types of AI aren’t just different in degree—they’re different in kind. Each operates within completely different boundaries, and understanding these limits helps clarify what’s actually possible versus what’s pure speculation.

Task Specialization vs Generalization

Here’s the most important distinction: ANI excels at specific, predefined functions but hits a wall the moment you ask it to do anything else. Your Netflix recommendation engine can’t suddenly start diagnosing medical conditions, no matter how sophisticated it gets.

AGI would flip this entirely. Instead of being locked into one domain, it would transfer knowledge between unrelated fields. Think of it as the difference between a highly specialized surgeon who can only perform one type of operation and a general practitioner who can handle diverse medical situations.

ASI takes generalization to an extreme we can barely imagine—potentially solving problems that humans can’t even conceptualize. The gap between these systems isn’t just about processing power; it’s about fundamental differences in how they approach problems.

Generalization ability—how well AI performs on new, unseen data—is where you really see the divide. Current AI systems typically fail when faced with scenarios outside their training data, while AGI would theoretically excel at understanding entirely new contexts. That’s the core difference between narrow and general AI.
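
The divide is easy to see even in a toy model. This sketch is a hypothetical illustration using plain least-squares line fitting: the model trains on the curve y = x² only for x between 0 and 1, where a straight line looks like a reasonable fit, and is then asked about x = 10, far outside anything it saw.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    return slope, mean_y - slope * mean_x


def true_fn(x):
    """The real pattern behind the data; the model never sees this formula."""
    return x * x


train_xs = [i / 10 for i in range(11)]  # training data only covers 0.0 to 1.0
slope, intercept = fit_line(train_xs, [true_fn(x) for x in train_xs])

for x in (0.5, 10.0):  # one input inside the training range, one far outside it
    pred = slope * x + intercept
    print(f"x={x:>4}: predicted {pred:7.2f}, actual {true_fn(x):7.2f}")
```

Inside the training range the line is off by about 0.1 (it predicts roughly 0.35 where the true value is 0.25); at x = 10 it predicts roughly 9.85 against a true value of 100. Nothing in the training data warned the model that the pattern would change.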

Learning Models: Supervised vs Autonomous

Today’s AI needs humans to label everything. Most current systems rely on supervised learning, where we essentially show them millions of examples and say “this is a cat, this is a dog”. They’re pattern-recognition machines, not independent learners.
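
Supervised learning in its simplest form is a comparison against labeled examples. The sketch below uses a 1-nearest-neighbor classifier over two made-up features per animal (weight in kilograms and ear length in centimeters); the features, numbers, and names are hypothetical stand-ins for the millions of labeled images a real vision model would be trained on.

```python
from math import dist

# Hypothetical labeled training set: (weight_kg, ear_length_cm) -> label.
# A human had to supply every one of these labels.
LABELED = [
    ((4.0, 6.5), "cat"),
    ((3.5, 7.0), "cat"),
    ((5.0, 6.0), "cat"),
    ((30.0, 10.0), "dog"),
    ((22.0, 12.0), "dog"),
    ((8.0, 9.0), "dog"),
]


def classify(features):
    """1-nearest-neighbor: copy the label of the closest labeled example."""
    _, label = min(LABELED, key=lambda example: dist(example[0], features))
    return label


if __name__ == "__main__":
    print(classify((4.2, 6.8)))     # close to the labeled cats
    print(classify((25.0, 11.0)))   # close to the labeled dogs
    print(classify((500.0, 25.0)))  # a horse-sized input still gets "cat" or "dog"
```

The last call shows the limitation: the model can only ever answer with a label a human gave it, so a 500 kg animal still comes back as one of the two known categories.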

AGI would learn like humans do—through interaction with the world, without needing every piece of information pre-labeled. It’s the difference between memorizing answers for a specific test versus actually understanding the subject matter.

The progression from ANI to ASI represents a shift from programmed responses to genuine autonomous learning: a move from rule-based automation to truly adaptive problem-solving.

Decision-Making and Contextual Awareness

Current AI systems are essentially very sophisticated “if-then” machines. They lack true understanding of context or purpose. They can process language but don’t actually grasp what they’re saying or why it matters.

AGI would understand context and nuance, making complex decisions based on reasoning rather than pattern matching. Instead of following pre-programmed rules, it would make independent judgments even in uncertain situations.

ASI’s decision-making capabilities would potentially be incomprehensible to humans. This progression represents not just better performance, but fundamentally different types of intelligence: paradigm shifts in how these systems would operate that redefine the scope and structure of machine intelligence.

What Silicon Valley Won’t Tell You About AGI

Tech companies have gotten remarkably good at shaping public perception around AI development. Behind the sleek presentations and confident predictions lies a much more complex reality about what these companies actually know, and what they’re not sharing with the public.

The Hype vs Reality of AGI Timelines

There’s a significant disconnect between what executives say publicly and what their internal assessments reveal. While some leaders confidently predict AGI within a decade, internal documents often show much longer, more realistic timelines. This isn’t accidental—it serves specific strategic purposes.

The numbers tell a different story than the marketing materials. Most AI researchers (76%) don’t believe that simply scaling up current approaches will yield true AGI. More than half of machine learning researchers think AGI is at least two decades away. That’s a far cry from the imminent breakthroughs that get announced at tech conferences.

Corporate Secrecy and Competitive Advantage

Competition has created a climate where companies closely guard their research progress for competitive advantage. The information asymmetry is deliberate: keeping rivals in the dark about real progress, setbacks, and technical challenges provides a significant advantage.

What you won’t hear companies discuss are the specific roadblocks they’ve hit. Technical challenges that seemed solvable in theory often prove much more difficult in practice. But admitting these limitations publicly could affect stock prices and investor confidence.

This secrecy serves shareholder interests first. Maintaining the perception of being ahead in the AGI race drives investment regardless of actual progress. The result is an environment where corporate messaging often has little connection to technical reality.

Ethical Dilemmas in AGI Development

Perhaps the most troubling aspect is how companies handle the ethical implications of their work. The race to develop AGI raises fundamental questions about control, safety, and societal impact—questions that rarely get serious public discussion from the companies doing the development.

Who should control such powerful technology? How do we ensure it benefits everyone rather than just a few tech giants? What happens if we get it wrong? These aren’t abstract philosophical questions—they’re practical concerns that need answers before AGI becomes reality.

Companies prefer to emphasize potential benefits while downplaying risks. It’s easier to talk about curing diseases and solving climate change than to address the possibility of creating systems we can’t control or understand.

Conclusion

Here’s the bottom line: only one type of AI actually exists today, despite what tech companies want you to believe.

Narrow AI powers the tools you use every day—your smartphone’s voice assistant, Netflix recommendations, and Google searches. It’s impressive technology that excels at specific tasks, but it’s not the human-like intelligence that Silicon Valley presentations often suggest.

AGI and ASI remain theoretical concepts, not imminent realities. The timeline for developing true artificial general intelligence likely stretches decades beyond what corporate roadmaps suggest. Most AI researchers (76%) doubt that simply scaling up current approaches will get us there, which tells you something about the gap between marketing hype and technical reality.

The companies pouring billions into AGI development aren’t being entirely transparent about the challenges they face. They emphasize potential benefits while downplaying the profound ethical questions: Who should control such powerful technology? How do we ensure it aligns with human values? What happens if we get it wrong?

Understanding these distinctions matters because it helps you separate signal from noise and better understand what’s genuinely achievable. When you hear about AI breakthroughs, you can ask the right questions: Is this narrow AI getting better at specific tasks, or is it genuinely moving toward human-like reasoning? Usually, it’s the former.

The current AI boom is built on narrow AI improvements, and that’s actually pretty remarkable. But true artificial general intelligence would represent a fundamental shift in machine capabilities—not just better pattern matching, but genuine understanding and reasoning across domains.

Until that breakthrough happens (if it happens), the most important thing is maintaining realistic expectations. Appreciate what current AI can do while staying skeptical of overly optimistic timelines. The future of AI is fascinating, but it’s also more uncertain than Silicon Valley presentations suggest.